Abstract

We show that the distribution of (a suitable rescaling of) a single eigenvalue gap \(\lambda _{i+1}(M_n)-\lambda _i(M_n)\) of a random Wigner matrix ensemble in the bulk is asymptotically given by the Gaudin–Mehta distribution, if the Wigner ensemble obeys a finite moment condition and matches moments with the GUE ensemble to fourth order. This is new even in the GUE case, as prior results establishing the Gaudin–Mehta law required either an averaging in the eigenvalue index parameter \(i\), or fixing the energy level \(u\) instead of the eigenvalue index. The extension from the GUE case to the Wigner case is a routine application of the Four Moment Theorem. The main difficulty is to establish the approximate independence of the eigenvalue counting function \(N_{(-\infty ,x)}(\tilde{M}_n)\) (where \(\tilde{M}_n\) is a suitably rescaled version of \(M_n\)) with the event that there is no spectrum in an interval \([x,x+s]\), in the case of a GUE matrix. This will be done through some general considerations regarding determinantal processes given by a projection kernel.

Similar content being viewed by others

1 Introduction

Given an \(n \times n\) Hermitian matrix \(M_n\), we let

be the \(n\) eigenvalues of \(M_n\) in non-decreasing order, counting multiplicity. The purpose of this paper is to study the eigenvalue gaps \(\lambda _{i+1}(M_n) - \lambda _i(M_n)\) of such matrices when \(M_n\) is drawn from the Gaussian Unitary Ensemble (GUE), or more generally from a Wigner random matrix ensemble, in the asymptotic limit \(n \rightarrow \infty \) and for a single \(i = i(n)\) in the bulk region \({\varepsilon }n \le i \le (1-{\varepsilon }) n\).

To begin with, let us set out our notational conventions for GUE and Wigner ensembles:

Definition 1

(Wigner and GUE) Let \(n \ge 1\) be an integer (which we view as a parameter going off to infinity). An \(n \times n\) Wigner Hermitian matrix \(M_n\) is defined to be a random Hermitian \(n \times n\) matrix \(M_n = (\xi _{ij})_{1 \le i,j \le n}\), in which the \(\xi _{ij}\) for \(1 \le i \le j \le n\) are jointly independent with \(\xi _{ji} = \overline{\xi _{ij}}\) (in particular, the \(\xi _{ii}\) are real-valued), and each \(\xi _{ij}\) has mean zero and variance one. We say that the Wigner matrix ensemble obeys condition C1 with constant \(C_0\) if one has

for some constant \(C\) (independent of \(n\)).

A GUE matrix \(M_n\) is a Wigner Hermitian matrix in which \(\xi _{ij}\) is drawn from the complex gaussian distribution \(N(0,1)_\mathbb{C }\) (thus the real and imaginary parts are independent copies of \(N(0,1/2)_\mathbb{R }\)) for \(i \ne j\), and \(\xi _{ii}\) is drawn from the real gaussian distribution \(N(0,1)_\mathbb{R }\).

A Wigner matrix \(M_n = (\xi _{ij})_{1 \le i,j \le n}\) is said to match moments to \(m^{{\text{ th}}}\) order with another Wigner matrix \(M^{\prime }_n= (\xi ^{\prime }_{ij})_{1\le i,j \le n}\) for some \(m \ge 1\) if one has

whenever \(a,b \in \mathbf{N }\) with \(a+b \le m\).

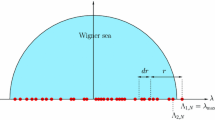

The bulk distribution of the eigenvalues \(\lambda _1(M_n),\ldots ,\lambda _n(M_n)\) of a Wigner (and in particular, GUE) matrix is governed by the Wigner semicircle law. Indeed, if we let \(N_I(M_n)\) denote the number of eigenvalues of \(M_n\) in an interval \(I\), and we assume Condition C1 for some \(C_0>2\), then with probabilityFootnote 1 \(1-o(1)\), we have the asymptotic

uniformly in \(I\), where \(\rho _{\text{ sc}}\) is the Wigner semi-circular distribution

see e.g. [1]. Informally, this law indicates that the eigenvalues \(\lambda _i(M_n)\) are mostly contained in the interval \([-2\sqrt{n},2\sqrt{n}]\), and for any energy level \(u\) in the bulk region \(-2+{\varepsilon }\le u \le 2-{\varepsilon }\) for some fixed \({\varepsilon }>0\), the average eigenvalue spacing should be \(\frac{1}{\sqrt{n} \rho _{\text{ sc}}(u)}\) near \(\sqrt{n} u\).

Now let \(M_n\) be drawn from GUE. The distribution of the eigenvalues \(\lambda _1(M_n),\ldots ,\lambda _n(M_n)\) are then well-understood. If we define the \(k\)-point correlation functions \(\rho ^{(n)}_k: \mathbb{R }^k \rightarrow \mathbb{R }^+\) for \(0 \le k \le n\) to be the unique symmetric continuous function for which

for any continuous function \(F\) which is compactly supported in the region \(\{ x_1 \le \cdots \le x_k \}\), then one has the well-known formula of Dyson [9]

and the Gaudin–Mehta formula

where \(K^{(n)}(x,y)\) is the kernel

and \(P_0(x), P_1(x), \ldots \) are the \(L^2\)-normalised orthogonal polynomials with respect to the measure \(e^{-x^2/2}\ dx\) (and are thus essentially Hermite polynomials); see e.g. [19] or [1]. In particular, the functions \(P_k(x) e^{-x^2/4}\) for \(i=0,\ldots ,n-1\) are an orthonormal basis to the subspace \(V^{(n)}\) of \(L^2(\mathbb{R }) = L^2(\mathbb{R }, dx)\) spanned by \(x^i e^{-x^2/4}\) for \(i=0,\ldots ,n-1\), thus the orthogonal projection \(P^{(n)}\) to this subspace is given by the formula

for any \(f \in L^2(\mathbb{R })\).

Applying the inclusion-exclusion formula, the Gaudin–Mehta formula implies that for any intervalFootnote 2 \(I\), the probability \(\mathbf{P }(N_I(M_n)=0)\) that \(M_n\) has no eigenvalues in \(I\), where \(N_I(M_n)\) is the number of eigenvalues of \(M_n\) in \(I\), is equal to

One can also express this probability as a Fredholm determinant

where we view the indicator function \(1_I\) as a multiplier operator on \(L^2(\mathbb{R })\).

The asymptotics of \(K^{(n)}\) as \(n \rightarrow \infty \) are also well understood, especially in the bulk of the spectrum,Footnote 3 which in this normalisation corresponds to the interval \([(-2+{\varepsilon })\sqrt{n}, (2-{\varepsilon })\sqrt{n}]\) for any fixed \({\varepsilon }>0\). Indeed, if \(-2+{\varepsilon }< u < 2+{\varepsilon }\) and \(x, y\) are bounded uniformly in \(n\), then from the Plancherel-Rotarch asymptotics for Hermite polynomials one has

where \(K_\mathrm{Sine}\) is the Dyson sine kernel

with the usual convention that \(K(x,x)=1\); see e.g. [1, 19], or [8, Corollary 1]. Note that the normalisations in (2) are consistent with the heuristic, from the Wigner semi-circular law, that the mean eigenvalue spacing at \(u\sqrt{n}\) is \(\frac{1}{\rho _{\text{ sc}}(u)\sqrt{n}}\). We observe that the Dyson sine kernel is also the kernel to the orthogonal projection \(P_\mathrm{Sine}\) to those functions \(f \in L^2(\mathbb{R }, dx)\) whose Fourier transform

is supported on the interval \([-1/2,1/2]\).

From (2) and some careful treatment of error terms (see e.g. [1, Chapter 3]) one obtains that

or in Fredholm determinant form,

Note that the kernel \(K_\mathrm{Sine}(x,y) 1_I(y)\) of \(P_\mathrm{Sine}1_I\) is square-integrable, and so \(P_\mathrm{Sine}1_I\) is in the Hilbert–Schmidt class, and so \(1_I P_\mathrm{Sine}1_I = (P_\mathrm{Sine}1_I)^* (P_\mathrm{Sine}1_I)\) is trace class.

This asymptotic can in turn be used to control the distribution of the averaged gap spacing distribution. Indeed, if \(1 < t_n < n\) is any sequence such that \(1/t_n, t_n/n = o(1)\), then for any \(-2+{\varepsilon }< u < 2-{\varepsilon }\) and \(s > 0\) independent of \(n\), the quantity

has the asymptotic

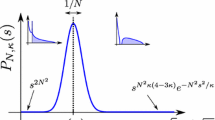

where \(p\) is the Gaudin distribution (or Gaudin–Mehta distribution)

or equivalently

see [7] for details. The quantity \(\det (1 - 1_{[0,y]} P_\mathrm{Sine}1_{[0,y]})\) (and hence the Gaudin distribution \(p(y)\)) can also be expressed in terms of a solution to a Painlevé V ordinary differential equation. More precisely, one has

where \(\sigma \) solves the ODE

with boundary condition \(\sigma (x) \sim -\frac{x}{\pi }\) as \(x \rightarrow 0\); see [15] (or the later treatment in [30]). Among other things, this implies that the Gaudin distribution \(p\) and all of its derivatives areFootnote 4 smooth, bounded, and rapidly decreasing on \((0,+\infty )\).

We also remark that the extreme values of the gaps \(\lambda _{i+1}(M_n)-\lambda _i(M_n)\) are also well understood; see [2]. However, our focus here will be on the bulk distribution of these gaps rather than on the tail behaviour.

In [25], a Four Moment Theorem for the eigenvalues of Wigner matrices was established, which roughly speaking asserts that the fine scale statistics of these eigenvalues depend only on the first four moments of the coefficients of the Wigner matrix, so long as some decay condition (such as Condition C1) is obeyed. In particular, by applying this theorem to the asymptotic (3) for GUE matrices, one obtains

Corollary 2

The asymptotic (3) is also valid for Wigner matrices \(M_n\) which obey Condition C1 for some sufficiently large absolute constant \(C_0\), and which match moments with GUE to fourth order.

Proof

See [25, Theorem 9]. Strictly speaking, the arguments in that paper require an exponential decay hypothesis on the coefficients on \(M_n\) rather than a finite moment condition, because the four moment theorem in that paper also has a similar requirement. However, the refinement to the four moment theorem established in the subsequent paper [26] (or in the later papers [17, 27]) relaxes that exponential decay condition to a finite moment condition. \(\square \)

We remark that the moment matching hypothesis in this corollary can in fact be removed by combining the above argument with some similar results obtained (by a different method) in [10, 16]; see [11].

The Wigner semi-circle law predicts that the location of an individual eigenvalue \(\lambda _i(M_n)\) of a Wigner or GUE matrix \(M_n\) for \(i\) in the bulk region \({\varepsilon }n \le i \le (1-{\varepsilon }) n\) should be approximately \(\sqrt{n} u\), where \(u = u_{i/n}\) is the classical location of the eigenvalue, given by the formula

Indeed, it is a result of Gustavsson [14] that \(\frac{\lambda _i(M_n) - \sqrt{n} u}{\sqrt{\log n/2\pi ^2}/\sqrt{n} \rho _{\text{ sc}}(u)}\) converges in distribution to the standard real Gaussian distribution \(N(0,1)_\mathbb{R }\), or more informally that

Note that the standard deviation \(\frac{\sqrt{\log n/2\pi ^2}}{\sqrt{n} \rho _{\text{ sc}}(u)}\) here exceeds the mean eigenvalue spacing \(\frac{1}{\sqrt{n} \rho _{\text{ sc}}(u)}\) by a factor comparable to \(\sqrt{\log n}\). If one heuristically applies this approximation (6) to the gap distribution law (3), one is led to the conjecture that the normalised eigenvalue gap

should converge in distribution to the Gaudin distribution, in the sense that

for any fixed \(s>0\).

Unfortunately, this is not quite a rigorous proof of (7). The problem is that the asymptotic (3) involves not just a single eigenvalue gap \(\lambda _{i+1}-\lambda _i\), but is instead an average over all eigenvalue gaps near the energy level \(\sqrt{n} u\). By (6), one is then forced to consider the contributions of at least \(\gg \sqrt{\log n}\) different values of \(i\) that could contribute to (3). One would of course expect the behaviour of \(\lambda _{i+1}-\lambda _i\) for adjacent values of \(i\) to be essentially identical, in which case one could pass from the averaged gap distribution (3) to the individual gap distribution (7). However, it is a priori conceivable (though admittedly quite strange) that there is non-trivial dependence on \(i\), for instance that \(\lambda _{i+1}-\lambda _i\) might tend to be larger than predicted by the Gaudin distribution for even \(i\), and smaller than predicted for odd \(i\), with the two effects canceling out in averaged statistics such as (3), but not in non-averaged statistics such as (7).

Our main result rules out such a pathological possibility:

Theorem 3

(Individual gap spacing) Let \(M_n\) be drawn from GUE, and let \({\varepsilon }n \le i \le (1-{\varepsilon }) n\) for some fixed \({\varepsilon }>0\). Then one has the asymptotic (7) for any fixed \(s>0\), where \(u = u_{i/n}\) is given by (5).

Applying the four moment theorem from [25] (with the extension to the finite moment setting in [26]), one obtains an immediate corollary:

Corollary 4

The conclusion of Theorem 3 is also valid for Wigner matrices \(M_n\) which obey Condition C1 for some sufficiently large absolute constant \(C_0\), and which match moments with GUE to fourth order.

Proof

This can be established by repeating the proof of [25, Theorem 9] (in fact the argument is even simpler than this, because one is working with a single eigenvalue gap rather than with an average, and can proceed more analogously to the proof of [25, Corollary 21]). We omit the details. \(\square \)

In view of the results in [11], it is natural to conjecture that the moment matching condition can be removed. Following [11], it would be natural to use heat flow methods to do so, in particular by trying to extend Theorem 3 to the gauss divisible ensembles studied in [16]. However, the methods in this paper rely very heavily on the determinantal form of the joint eigenvalue distribution of GUE (and not just on control of the \(k\)-point correlation functions); the formulae in [16] also have some determinantal structure, but it is unclear to us whether this similarity of structure is sufficient to replicate the arguments.Footnote 5 On the other hand, we expect analogues Theorem 3 to be establishable for other ensembles with a determinantal form, such as GOE and GSE, or to more general \(\beta \) ensembles involving a non-quadratic potential for the classical values \(1,2,4\) of \(\beta \). We will not pursue these matters here.

The key to proving Theorem 3 lies in establishing the approximate independenceFootnote 6 of the eigenvalue counting function \(N_{(-\infty ,x)}(\tilde{M}_n)\) from the event that \(\tilde{M}_n\) has no eigenvalues in a short interval \([x,x+s]\) (i.e. that \(N_{[x,x+s]}(\tilde{M}_n)=0\)), where \(\tilde{M}_n\) is a suitably rescaled version of \(M_n\). Roughly speaking, this independence, coupled with a central limit theorem for \(N_{(-\infty ,x)}(\tilde{M}_n)\), will imply that the distribution of a gap \(\lambda _{i+1}(M_n)-\lambda _i(M_n)\) is essentially invariant with respect to small changes in the \(i\) parameter. To obtain this approximate independence, we use the properties of determinantal processes, and in particular the fact that a determinantal point process \(\Sigma \), when conditioned on the event that a given interval such as \([x,x+s]\) contains no elements of \(\Sigma \), remains a determinantal point process (though with a slightly different kernel). The main difficulty is then to ensure that the new kernel is close to the old kernel in a suitable sense (more specifically, we will compare the two kernels in the nuclear norm \(S^1\)).

We thank Peter Forrester, Van Vu, and the anonymous referee for corrections.

2 Notation

In this paper, \(n\) will be an asymptotic parameter going to infinity. A quantity is said to be fixed if it does not depend on \(n\); if a quantity is not specified as fixed, then it is permitted to vary with \(n\). Given two quantities \(X, Y\), we write \(X = O(Y), X \ll Y\), or \(Y \gg X\) if we have \(|X| \le CY\) for some fixed \(C\), and \(X = o(Y)\) if \(X/Y\) goes to zero as \(n \rightarrow \infty \).

An interval will be a connected subset of the real line, which may possibly be half-infinite or infinite. If \(I\) is an interval, we use \(I^c := \mathbb{R }\backslash I\) to denote its complement.

We use \(\sqrt{-1}\) to denote the imaginary unit, in order to free up the symbol \(i\) for other purposes, such as indexing eigenvalues.

Given a bounded operator \(A\) on a Hilbert space \(H\), we denote the operator norm of \(A\) as \(\Vert A\Vert _{\text{ op}}\). We will also need the Hilbert–Schmidt norm (or Frobenius norm)

with the convention that this norm is infinite if \(A^* A\) or \(AA^*\) is not trace class. Similarly, we will need the Schatten 1-norm (or nuclear norm)

which is finite when \(A\) is trace class. Note that if \(A\) is compact with non-zero singular values \(\sigma _1,\sigma _2,\ldots \) then we have

Indeed, one should view the operator, Hilbert–Schmidt, and nuclear norms as non-commutative versions of the \(\ell ^\infty , \ell ^2\), and \(\ell ^1\) norms respectively.

For us, the reason for introducing the nuclear norm \(S^1\) is that it controls the trace:

On the other hand, the Hilbert–Schmidt and operator norms are significantly easier to estimate than the nuclear norm. To bridge the gap, we will rely heavily on the non-commutative Hölder inequalities

see e.g. [4]. We will use these inequalities in this paper without further comment.

We remark that for integral operators

on \(L^2(\mathbb{R })\) for locally integrable \(K\), the Hilbert–Schmidt norm of \(T\) is given by

when the right-hand side is finite.

3 Some general theory of determinantal processes

In this section we record some of the theory of determinantal processes which we will need. We will not attempt to exhaustively describe this theory here, referring the interested reader to the surveys [21, 23] or [20] instead. We will also not aim for maximum generality in this section, restricting attention to determinantal processes on \(\mathbb{R }\), whose associated locally trace class operator \(P\) will usually be an orthogonal projection, and often of finite rank.

Define a good kernel to be a locally integrable function \(K: \mathbb{R }\times \mathbb{R }\rightarrow \mathbb{C }\), such that the associated integral operator

can be extended from \(C_c(\mathbb{R })\) to a self-adjoint bounded operator on \(L^2(\mathbb{R })\), with spectrum in \([0,1]\). Furthermore, we require that \(P\) be locally trace class in the sense that for every compact interval \(I\), the operator \(1_I P 1_I\) is trace class; this will for instance be the case if \(K\) is smooth. If \(K\) is a good kernel, then (as was shown in [18, 23]; see also [20] or [1]), \(K\) defines a point process \(\Sigma \subset \mathbb{R }\), i.e. a random subsetFootnote 7 of \(\mathbb{R }\) that is almost surely locally finite, with the \(k\)-point correlation functions

for any \(k \ge 0\), thus

for any disjoint intervals \(I_1,\ldots ,I_k\). This process is known as the determinantal point process with kernel \(K\).

The distribution of a determinantal point process in an interval \(I\) is described by the following lemma:

Lemma 5

Let \(\Sigma \) be a determinantal point process on \(\mathbb{R }\) associated to a good kernel \(K\) and associated operator \(P\). Let \(I\) be a compact interval, and suppose that the operator \(1_I P 1_I\) has non-zero eigenvalues \(\lambda _1, \lambda _2, \ldots \in (0,1]\). Then \(\# (\Sigma \cap I)\) has the same distribution as \(\sum _i \xi _i\), where the \(\xi _i\) are jointly independent Bernoulli random variables, with each \(\xi _i\) equalling \(1\) with probability \(\lambda _i\) and \(0\) with probability \(1-\lambda _i\). In particular, one has

and

and

Proof

See e.g. [1, Corollary 4.2.24]. \(\square \)

As a corollary of Lemma 5, we see that \(\mathbf{P }( \#(\Sigma \cap I) = 0 ) > 0\) unless \(P\) has an eigenfunction of eigenvalue \(1\) that is supported on \(I\).

An important special case of determinantal point processes arises when the operator \(P\) is an orthogonal projection of some finite rank \(n\), which is the situation with the GUE point process \(\{ \lambda _1(M_n),\ldots ,\lambda _n(M_n)\}\), which as discussed in the introduction is a determinantal point process with kernel \(K^{(n)}\) given by (1). In this case, the hypotheses on \(P\) (i.e. self-adjoint trace class with eigenvalues in \([0,1]\)) are automatically satisfied, and the determinantal point process \(\Sigma \) is almost surely a set of cardinality \(n\); see e.g. [20, 23] or [1]. In this situation, the \(k\)-point correlation functions \(\rho _k\) vanish for \(k>n\), and for \(k<n\) we have the Gaudin lemma

which allows one to recursively obtain the correlation functions from the \(n\)-point correlation function \(\rho _n\) (which is essentially the joint density function of the \(n\) elements of \(\Sigma \)). Note that (9) in fact holds for any point process whose cardinality is almost surely \(n\), if the process is almost surely simple with locally integrable correlation functions.

If \(V\) is the \(n\)-dimensional range of \(P\), and \(\phi _1,\ldots ,\phi _n\) is an orthonormal basis for \(V\), then the kernel \(K\) of the orthogonal projection \(P\) can be expressed explicitly as

and thus (by the basic formula \(\det (A^* A)= |\det (A)|^2\))

This leads to the following consequence:

Proposition 6

(Exclusion of an interval) Let \(\Sigma \) be a determinantal process associated to the orthogonal projection \(P_V\) to an \(n\)-dimensional subspace \(V\) of \(L^2(\mathbb{R })\). Let \(I\) be a compact interval, and suppose that no non-trivial element of \(V\) is supported in \(I\). Then the event \(E := ( \#(\Sigma \cap I) = 0 )\) occurs with non-zero probability, and upon conditioning to this event \(E\), the resulting random variable \((\Sigma |E)\) is a determinantal point process associated to the orthogonal projection \(P_{1_{I^c} V}\) to the \(n\)-dimensional subspace \(1_{I^c} V\) of \(L^2(\mathbb{R })\).

Proof

This is a continuous variant of [21, Proposition 6.3], and can be proven as follows. By construction, \(P_V\) has no eigenvector of eigenvalue \(1\) supported in \(I\), and so \(\mathbf{P }(E) = \det (1 - 1_I P_V )\) is non-zero. The point process \((\Sigma |E)\) clearly has cardinality \(n\) almost surely, and is thus described by its \(n\)-point correlation function, which is a constant multiple of

which by (10) can be written as

where \(\phi _1,\ldots ,\phi _n\) is an orthonormal basis for \(V\).

By hypothesis on \(V, \phi _1 1_{I^c},\ldots ,\phi _n 1_{I^c}\) is a (not necessarily orthonormal) basis for \(1_{I^c} V\). By row operations, we can thus write (11) as a constant multiple of

where \(\phi ^{\prime }_1,\ldots ,\phi ^{\prime }_n\) is an orthonormal basis for \(1_{I^c} V\). But this is the \(n\)-point correlation function for the determinantal point process of \(P_{1_{I^c} V}\). As the \(n\)-point correlation function of an \(n\)-point process integrates to \(n!\) (cf. (9)), we see that the \(n\)-point correlation function of \((\Sigma |E)\) must be exactly equal to that of the determinantal point process of \(P_{1_{I^c} V}\), as claimed. \(\square \)

It is likely that the above proposition can be extended to infinite-dimensional projections (possibly after imposing some additional regularity hypotheses), but we will not pursue this matter here.

We saw in Lemma 5 that if \(\Sigma \) is a determinantal point process and \(I\) is a compact interval, then the random variable \(\# (\Sigma \cap I)\) is the sum of independent Bernoulli random variables. If the variance of this sum is large, then such a sum should converge to a gaussian, by the central limit theorem. Examples of such central limit theorems for \(\# (\Sigma \cap I)\) were formalised in [6, 24]. We will need a slight variant of these theorems, which gives uniform convergence on the probability density function of \(\# (\Sigma \cap I)\) as opposed to the probability distribution function.

Lemma 7

(Discrete density version of central limit theorem) Let \(X = \xi _1 + \cdots + \xi _n\) be the sum of independent Bernoulli random variables \(\xi _1,\ldots ,\xi _n\), with mean \(\mathbf{E }X = \mu \) and variance \(\mathbf{Var}X = \sigma ^2\) for some \(\sigma > 0\). Then for any integer \(m\), one has

One can improve the error term here to \(O(\sigma ^{-2})\) with a little more effort, but any error better than \(1/\sigma \) will suffice for our purposes.

Proof

We may assume that \(\sigma \) is larger than any given absolute constant, as the claim is trivial otherwise. We use the Fourier-analytic method. Write \(p_i := \mathbf{E }\xi _i\), then

and

Observe that \(X\) has characteristic function

and so

We can rewrite this integral slightly as \(A + B\), where

and

We first control \(A\). From Taylor expansion one has

in this regime, and so by (12)

We therefore have

Since

and

and

(say), we conclude that

Now we control \(B\). Elementary computation shows that

in this regime for some absolute constant \(c>0\). By (12), we may thus bound

and so \(B = O(\sigma ^{-1.7})\). Combining this with (13), the claim follows. \(\square \)

Combining Lemma 5, Proposition 6, and Lemma 7 we immediately obtain

Corollary 8

Let \(\Sigma \) be a determinantal point process on \(\mathbb{R }\) whose kernel \(P\) is an orthogonal projection to an \(n\)-dimensional subspave \(V\) of \(L^2(\mathbb{R })\). Let \(I\) be a compact interval, and \(m\) be an integer. Then

where

and

Furthermore, if \(J\) is another compact interval disjoint from \(J\), such that no non-trivial element of \(V\) is supported on \(J\), then

where

and

and \(\tilde{P}\) is the orthogonal projection to \(1_{J^c} V\).

Let the notation be as in the above corollary. In our application, we will need to determine the extent to which events such as \(\# (\Sigma \cap I) = m\) and \(\# (\Sigma \cap J) = 0\) are independent. In view of Corollary 8, it is then natural to determine the extent to which the projection \(\tilde{P}\) differs from that of \(P\).

Observe that as no non-trivial element of \(V\) is supported on \(J\), the operator \(P 1_{J^c} P\), viewed as a map from \(V\) to \(V\), is invertible. Denoting its inverse on \(V\) by \((P 1_{J^c} P)_V^{-1}\), we then see that the operator \(1_{J^c} P (P 1_{J^c} P)_V^{-1} P 1_{J^c}\) is self-adjoint, idempotent, and has \(1_{J^c} V\) as its range, and so must be equal to \(\tilde{P}\):

We can also write \((P 1_{J^c} P)_V^{-1}\) as \((1 - P 1_J P)_V^{-1}\) (since \(P\) is the identity on \(V\)). Thus, by Neumann series, we formally have the expansion

This expansion is convergent for sufficiently small \(J\), but does not necessarily converge for \(J\) large. However, in practice we will be able to invert \(1-P1_J P\) by a perturbation argument involving the Fredholm alternative. More precisely, in our application, the finite rank projection \(P\) will be “close” in some weak sense to an infinite rank projection \(P_0\) (in our application, \(P_0\) will be the Dyson projection \(P_\mathrm{Sine}\)), projecting to some infinite-dimensional Hilbert space \(V_0\). We will assume \(P_0\) to be locally trace class, so that \(P_0 1_J P_0\) is compact. If we assume that no non-trivial element of \(V_0\) is supported on \(J\), then the Fredholm alternative (see e.g. [22, Theorem VI.14]) then implies that \(1 - P_0 1_J P_0: V_0 \rightarrow V_0\) is invertible, with inverse \((1 - P_0 1_J P_0)_{V_0}^{-1} = 1 + K_0\) for some compact operator \(K_0\), thus

on \(L^2(\mathbb{R })\). As \(1-P_0 1_J P_0\) is self-adjoint and is the identity on \(V_0\), we see that \(K_0\) has range in \(V_0\) and cokernel in \(V_0^\perp \), thus

One then expects \(1 + PK_0P: V \rightarrow V\) to be an approximate inverse to \(1-P1_J P\). Indeed, we have

where

Meanwhile, from (15) we have

and thus

Let us now bound some norms of \(E\). As the projection operator \(P\) has an operator norm of at most \(1\), one has

splitting \(P_0 1_J P_0 - P 1_J P\) as \((P_0-P) 1_J P_0 - P 1_J (P_0 - P)\) we conclude that

If we now make the hypothesis that

then we have \(\Vert E\Vert _{\text{ op}} \le 1/2\), and so we have the Neumann series

In particular,

To bound the right-hand side, we use the triangle inequality to obtain

Factorising \(P_0 1_J P_0 = (1_J P_0)^* (1_J P_0)\) and similarly for \(P 1_J P\), we conclude that

Note that \(E\) maps \(V\) to itself, and so \((1+E)^{-1}\) can also be viewed as an operator from \(V\) to itself (being the identity on \(V^\perp \)). From (16) one then has

and thus

Applying (14), we conclude that

To deal with the \(PK_0P\) term we observe from (17) and the factorisation \(P_0 1_J P_0 = (1_J P_0)^* (1_J P_0)\) that

and so

We summarise the above discussion as a proposition:

Proposition 9

(Approximate description of \(\tilde{P}\)) Let \(P\) be a projection to an \(n\)-dimensional subspace \(V\) of \(L^2(\mathbb{R })\), and let \(J\) be a compact interval such that no non-trivial element of \(V\) is supported on \(J\). Let \(P_0\) be a projection to a (possibly infinite-dimensional) subspace \(V_0\) of \(L^2(\mathbb{R })\) which is locally trace class, and such that no non-trivial element of \(V_0\) is supported on \(J\). Let \(K_0: L^2(\mathbb{R }) \rightarrow L^2(\mathbb{R })\) be the compact operator solving (15) that is provided by the Fredholm alternative. Suppose that

Let \(\tilde{P}\) be the orthogonal projection to \(1_{J^c} V\). Then

Because the \(S^1\) norm controls the trace, this proposition allows us to compare the quantities \(\tilde{\mu },\tilde{\sigma }^2\) from Corollary 8 with their counterparts \(\mu ,\sigma ^2\):

Corollary 10

Let \(n, P, V, J, P_0, K_0\) be as in Proposition 9 (in particular, we make the hypothesis (19)). Let \(I\) be a compact interval disjoint from \(J\), and let \(\mu ,\sigma ^2,\tilde{\mu },\tilde{\sigma }^2\) be as in Corollary 8. Then we have

and

where \(M\) is the quantity

In practice, this corollary will allow us to show that the random variable \(\#(\Sigma \cap I)\) is essentially independent of the event \(\#(\Sigma \cap J)=0\) for certain determinantal point processes \(\Sigma \) and disjoint intervals \(I,J\).

4 Proof of main theorem

We are now ready to prove Theorem 3. We may of course assume that \(n\) is larger than any given absolute constant.

Let \(n, M_n, {\varepsilon }, i, u\) be as in Theorem 3, and let \(X\) be the random variable

Clearly \(X\) takes values in \(\mathbb{R }^+\) almost surely. Our task is to show that

for all fixed \(s>0\), or equivalently that

for all fixed \(s>0\).

It will suffice to show that

for all fixed \(s>0\), since on applying this with two choices \(0 < s_1 < s_2\) of \(s\), subtracting, and then dividing by \(s_2-s_1\) we see that

letting \(s_1, s_2\) approach a given value \(s\) from the left or right, we then conclude the bounds

for any fixed \(\delta >0\), and (20) follows from the monotone convergence theorem.

It remains to prove (21). By (4), the left-hand side of (21) can be written as

Meanwhile, if we introduce the normalised random matrix

then we have

For any fixed choice of \(\tilde{M}_n\), we observe the identity

since the set of real numbers \(x\) for which \(N_{(-\infty ,x)}(\tilde{M}_n)=i \wedge N_{[x,x+s]}(\tilde{M}_n)=0\) holds is an interval of length \(X-s\) when \(X>s\), and empty otherwise. Taking expectations and using the Fubini-Tonelli theorem, we conclude that

Our task is thus to show that

Let \(t_n := \log ^{0.6} n\) (say). We will shortly establish the following claims:

-

(i)

(Tail estimate) We have

$$\begin{aligned} \int _{|x| \ge t_n} \mathbf{P }( N_{(-\infty ,x)}(\tilde{M}_n)=i )\ dx = o(1). \end{aligned}$$(23) -

(ii)

(Approximate independence) For \(|x| < t_n\), one has

$$\begin{aligned} \mathbf{P }( N_{(-\infty ,x)}(\tilde{M}_n)&= i \wedge N_{[x,x+s]}(\tilde{M}_n)=0 )\nonumber \\&= \mathbf{P }( N_{(-\infty ,x)}(\tilde{M}_n)=i ) \mathbf{P }( N_{[x,x+s]}(\tilde{M}_n)=0)\nonumber \\&+ O( \log ^{-0.85} n ). \end{aligned}$$(24) -

(iii)

(Gap probability at fixed energy) For \(|x| < t_n\), one has

$$\begin{aligned} \mathbf{P }( N_{[x,x+s](\tilde{M}_n)=0} ) = \det (1 - 1_{[0,s]} P_\mathrm{Sine}1_{[0,s]}) + o(1). \end{aligned}$$(25) -

(iv)

(Central limit theorem) For \(|x| < t_n\), one has

$$\begin{aligned} \mathbf{P }( N_{(-\infty ,x)}(\tilde{M}_n)=i ) = \frac{1}{\sqrt{2\pi } \sigma } e^{-x^2/2\sigma ^2} + O( \log ^{-0.85} n ) \end{aligned}$$(26)where \(\sigma := \sqrt{\log n/2\pi ^2}\).

Let us assume these estimates for the moment. From (24), (25), (26) one has

for \(|x| \le t_n\). Since

we conclude from the choice of \(t_n\) that

and the claim (22) then follows from (23).

It remains to establish the estimates (23), (24), (25), (26). We begin with (23). We can rewrite

From the rigidity of eigenvalues of GUE (seeFootnote 8 e.g. [28, Corollary 5]) we know that

(say) unless

Because of this, to prove (23) we may restrict to the regime where \(x = O(\log ^{O(1)} n)\).

ByFootnote 9 Lemma 5, for any real number \(y, N_{(-\infty ,y)}(M_n)\) is the sum of \(n\) independent Bernoulli variables. The mean and variance of such random variables was computed in [14]. Indeed, from [14, Lemma 2.1] one has (after adjusting the normalisation)

while from [14, Lemma 2.3] one has

Renormalising (and using the hypothesis \(x = O(\log ^{O(1)} n)\)), we conclude that

and

Applying Bennet’s inequality (see [3]), we conclude that

for some absolute constant \(c>0\), which gives (23). The bound (26) follows from the same computations, using Lemma 7 (or Corollary 8) in place of Bennet’s inequality.

The estimate (25) is well known (seeFootnote 10 e.g. [1, Theorem 3.1.1]); for future reference we remark that this estimate also implies the crude lower bound

for \(n\) sufficiently large. We therefore turn to (24). By (27) and (26), it suffices to establish the conditional probability estimate

We now turn to (28). Recall that the eigenvalues of \(M_n\) form a determinantal point process with kernel \(K^{(n)}\) given by (1). Rescaling this, we see that the eigenvalues of \(\tilde{M}_n\) form a determinantal point process with kernel \(\tilde{K}^{(n)}\) given by the formula

This is the kernel of an orthogonal projection \(\tilde{P}^{(n)}\) to some \(n\)-dimensional subspace \(\tilde{V}^{(n)}\) in \(L^2(\mathbb{R })\). The elements of this subspace consist of polynomial multiples of a gaussian function, and in particular there is no non-trivial element of \(\tilde{V}^{(n)}\) that vanishes on \([x,x+s]\). Applying Corollary 8 (and a truncation argument to deal with the non-compact nature of \((-\infty ,x)\)), one has

where

and

and \(P^{\prime }\) is the orthogonal projection to \(1_{[x,x+s]^c} \tilde{V}^{(n)}\). To establish (28), it will thus suffice to establish the bounds

and

To do this, we will use Corollary 10, with \(J := [x,x+s]\), and the role of \(P_0\) being played by the Dyson projection \(P_\mathrm{Sine}\). From the well-known fact that a non-trivial function and its Fourier transform cannot both be compactly supported, we see that there is no non-trivial function in the range of \(P_\mathrm{Sine}\) supported in \(J\). As \(P_\mathrm{Sine}\) is locally trace class, we conclude from the Fredholm alternative (see e.g. [22, Theorem VI.14]) that the compact operator \(K_0\) defined by (15) exists. As \(K_0\) is independent of \(n\), we certainly haveFootnote 11

and similarly

By Corollary 10 (once again using a truncation argument to deal with the half-infinite nature of \((-\infty ,x)\)), it will thus suffice to show that

and

Since the Hilbert–Schmidt norm controls the operator norm, we see from (29) that (30), (31) will both follow from the bound

Using the integral kernels \(K_\mathrm{Sine}, \tilde{K}^{(n)}\) of \(P_\mathrm{Sine}, \tilde{P}^{(n)}\) and the compact nature of \(J\), it suffices to show that

uniformly for all \(y \in J\). In principle one could establish this bound from a sufficiently precise analysis of the asymptotics of Hermite polynomials (such as those given in [8]), but one can actually derive this bound from the standard convergence result (2) as follows. From (2) we know that \(\tilde{K}^{(n)}(x,y)\) converges locally uniformly in \(x,y\) to \(K_\mathrm{Sine}(x,y)\) as \(n \rightarrow \infty \), and so

for any fixed \(L\). Also, as \(P_\mathrm{Sine}, \tilde{P}^{(n)}\) are both projections, one has

and

From (2), one has

For any given \({\varepsilon }> 0\), one can find an \(L\) such that

and thus

But from (33) and the triangle inequality we have

and so

From this, (33), and (34) we conclude that

and the claim (32) follows by sending \({\varepsilon }\) to zero. The proof of Theorem 3 (and thus also Corollary 4) is now complete.

Notes

See Sect. 2 for the conventions for asymptotic notation such as \(o(1)\) that are used in this paper.

One can generalise this formula from intervals to arbitrary Borel measurable sets, but in our applications we will only need the interval case. Similarly for many of the other determinantal process identities used in this paper.

For the edge of the spectrum, one can control individual eigenvalues instead by the Tracy-Widom law [29]. There is however a transitional regime between the bulk and the edge which is not covered by either our results or by the Tracy-Widom law, e.g. when \(\min (i,n-i)\) is comparable to \(n^\theta \) for some fixed \(0<\theta <1\), and which may be worth further attention. Given that convergence to the sine kernel is also known in such regimes, one would expect the main results of this paper to extend to these settings, although to make this intuition rigorous would require some careful argument which we do not pursue here.

Indeed, the famous Wigner surmise predicts the reasonably accurate approximation \(p(x) \!\approx \! \frac{1}{2} \pi x e^{-\pi x^2/4}\); see e.g. [19].

By using heat flow methods such as the method of local relaxation flow [12], one can obtain control on energy-averaged correlation functions in this setting, and similarly for non-classical \(\beta \)-ensembles \(\beta \ne 1,2,4\) as was done recently in [5]. Such bounds are sufficient to obtain averaged gap information of the form (3) (at least for values of \(t_n\) that grow faster than logarithmic), but it is not obvious how to isolate a single eigenvalue gap to then obtain (7).

It may be surprising to the experts that the counting functions on \((-\infty ,x)\) and \([x,x+s]\) are approximately independent, as the intervals are adjacent. The point is that while there is a correlation between the two counting functions, the covariance between them is essentially of order \(O(1)\), whilst the variance of \(N_{(-\infty ,x)}\) is of order \(\log n\), and so the correlation between the two random variables is ends up being asymptotically negligible. To put it another way, most of the random fluctuation of \(N_{(-\infty ,x)}\) comes from the portion of the spectrum that is far away from \(x\), and this contribution will be almost completely decoupled from the spectrum at \([x,x+s]\).

Strictly speaking, a point process is permitted to have multiplicity, so that it becomes a multiset rather than a set. However, as we are restricting attention to kernels \(K\) which are locally integrable, the determinantal point processes we consider will be almost surely simple, in the sense that no multiplicity occurs.

Strictly speaking, Lemma 5 is not applicable as stated because \((-\infty ,y)\) is not a compact interval, but this can be addressed by the usual truncation argument, replacing \((-\infty ,y)\) with \((-M,y)\) and then letting \(M\) go to infinity, exploiting the exponential decay of \(M_n\). We omit the routine details.

Note that our bound here on \(\Vert K_0\Vert _{op}\) is ineffective, as it relies on the Fredholm alternative. However, it is quite probable that one can obtain an effective bound on \(K_0\) here by using a quantitative versions of Hardy’s uncertainty principle to give a more robust version of the assertion that a non-trivial function and its Fourier transform cannot both be compactly supported. We will not pursue this issue here.

References

Anderson, G., Guionnet, A., Zeitouni, O.: An introduction to random matrices. In: Cambridge Studies in Advanced Mathematics, vol. 118. Cambridge University Press, Cambridge (2010)

Ben Arous, G., Bourgade, P.: Extreme gaps between eigenvalues of random matrices, arXiv:1010.1294

Bennett, G.: Probability inequalities for the sum of independent random variables. J. Am. Stat. Assoc. 57, 33–45 (1962)

Bhatia, R.: Matrix Analysis. Springer, New York (1997)

Bourgade, P., Erdős, L., Yau, H.T.: Universality of General \(\beta \)-Ensembles, arXiv:1104.2272

Costin, O., Lebowitz, J.: Gaussian fluctuations in random matrices. Phys. Rev. Lett. 75(1), 69–72 (1995)

Deift, P., Kriecherbauer, T., McLaughlin, K.T.-R., Venakides, S., Zhou, X.: Uniform asymptotics for polynomials orthogonal with respect to varying exponential weights and applications to universality questions in random matrix theory. Comm. Pure Appl. Math. 52(11), 1335–1425 (1999)

Delyon, B., Yao, J.: On the spectral distribution of Gaussian random matrices. Acta Math. Sin. Eng. Ser. 22(2), 297–312 (2006)

Dyson, F.: The threefold way. Algebraic structure of symmetry groups and ensembles in quantum mechanics. J. Math. Phys. 3, 1199–1215 (1962)

Erdős, L., Peche, S., Ramirez, J., Schlein, B., Yau, H.-T.: Bulk universality for Wigner matrices, arXiv:0905.4176

Erdős, L., Ramirez, J., Schlein, B., Tao, T., Vu, V., Yau, H.-T.: Bulk universality for Wigner hermitian matrices with subexponential decay. Math. Res. Lett, arxiv:0906.4400 (to appear)

Erdős, L., Schlein, B., Yau, H.-T.: Universality of Random Matrices and Local Relaxation Flow, arXiv:0907.5605

Erdős, L., Yau, H.-T., Yin, J.: Rigidity of eigenvalues of generalized Wigner matrices, arXiv:1007.4652

Gustavsson, J.: Gaussian fluctuations of eigenvalues in the GUE. Ann. Inst. H. Poincaré Probab. Statist. 41(2), 151–178 (2005)

Jimbo, M., Miwa, T.: Tetsuji, Mori, Y., Sato, M.: Density matrix of an impenetrable Bose gas and the fifth Painlevé transcendent. Phys. D 1(1), 80–158 (1980)

Johansson, K.: Universality of the local spacing distribution in certain ensembles of Hermitian Wigner matrices. Comm. Math. Phys. 215(3), 683–705 (2001)

Knowles, A., Yin, J.: Eigenvector Distribution of Wigner Matrices, arXiv:1102.0057

Macchi, O.: The coincidence approach to stochastic point processes. Adv. Appl. Probab. 7, 83–122 (1975)

Mehta, M.L.: Random Matrices and the Statistical Theory of Energy Levels. Academic Press, New York (1967)

Hough, J., Krishnapur, M., Peres, Y., Virág, B.: Determinantal processes and independence. Probab. Surv. 3, 206–229 (2006)

Lyons, R.: Determinantal probability measures. Publ. Math. Inst. Hautes Études Sci. 98, 167–212

Reed, M., Simon, B.: Methods of modern mathematical physics. I. In: Functional Analysis, 2nd edn. Academic Press (Harcourt Brace Jovanovich, Publishers), New York (1980)

Soshnikov, A.: Determinantal random point fields. Russ. Math. Surv. 55, 923–975 (2000)

Soshnikov, A.: Gaussian limit for determinantal random point fields. Ann. Probab. 30(1), 171–187 (2002)

Tao, T., Vu, V.: Random matrices: universality of the local eigenvalue statistics. Acta Math. 206, 127–204 (2011)

Tao, T., Vu, V.: Random covariance matrices: university of local statistics of eigenvalues. Ann. Probab. (2012) (to appear)

Tao, T., Vu, V.: Random matrices: universal properties of eigenvectors. Random Matrices Theory Appl. (2012) (to appear)

Tao, T., Vu, V.: Random matrices: sharp concentration of eigenvalues (preprint). http://arxiv.org/pdf/1201.4789.pdf

Tracy, C., Widom, H.: On orthogonal and symplectic matrix ensembles. Commun. Math. Phys. 177, 727–754 (1996)

Tracy, C.A., Widom, H.: Introduction to random matrices. In: Helminck, G.F. (ed.) Geometric and Quantum Aspects of Integrable Systems. Lecture Notes in Physics, vol. 424. Springer, Berlin, pp. 103–130 (1993)

Author information

Authors and Affiliations

Corresponding author

Additional information

T. Tao is supported by NSF grant DMS-0649473.

Rights and permissions

About this article

Cite this article

Tao, T. The asymptotic distribution of a single eigenvalue gap of a Wigner matrix. Probab. Theory Relat. Fields 157, 81–106 (2013). https://doi.org/10.1007/s00440-012-0450-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00440-012-0450-3