Matroids: The Value of Abstraction

3. Multiple births

There are related concepts embedded inside graphs and vector spaces. Let us start with vector spaces.

Among the major ideas that linear algebra (the branch of mathematics concerned with the structure of the solutions of linear systems of equations and related phenomena) brings to studying the geometry of points and vectors are the notions of independence, dependence, basis, and spanning set of a space. Intuitively, a collection of vectors (or points) I is independent if one cannot obtain any vector (point) in the set I as a linear combination of the other points in I. An independent set to which one cannot add any further vector (point) and still have an independent set is called a basis. In other words, a basis (for a finite dimensional vector space) is a maximal independent set of vectors.

Intuitively, a collection of points S spans a space if every point in the space can be obtained as a linear combination of points in S. A set which spans a space may have the property that one can throw away elements from the set and still have a set that spans. One might therefore seek minimal spanning sets. It turns out that such sets consist of independent elements. Thus a basis for a space is a set that spans the space and consists of independent elements. Vectors can be independent, but if there are not enough of them, they will not span the space. Vectors can span a space, but if there are too many of them, they will not be independent.

How can one capture these ideas in a rule or axiomatic structure? Suppose I is a collection of subsets of a finite set E, whose members we will think of as the independent sets. Here are the rules we want the collection I to obey:

(Independence 1) The set I is not empty.

(Independence 2) Every subset of a member of I is also a member of I.

Independence 2 involves the concept of being hereditary: subsets of things having the property should also have the property. An analogy with more mundane things than sets would be that if one takes a piece of food and subdivides it into parts, one has something one can still eat (i.e., food).

(Independence 3) If X and Y are in I where X has one more element than Y, one can find an element x in X which is not in Y such that Y together with x is in I.

Note that independent sets in a vector space have this property. We have here some rules that abstract the notion of independence.

A matroid is now defined to consist of a finite set E together with a collection I of subsets of E which satisfies Independence 1 through Independence 3.

Example:

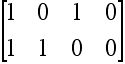

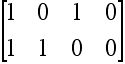

Consider the matrix A shown above.

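Number the columns of the matrix with the numbers 1, 2, 3, and 4, and let E be this set of column label numbers. We consider the following subsets of E, which the discussion below identifies as the empty set together with {1}, {2}, {3}, {1, 2}, {1, 3}, and {2, 3}.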

You can verify that this collection of sets can serve as the collection I of independent sets for a matroid on the set E, by checking that the axioms (rules) we have stated hold for this collection of sets.
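The verification can also be sketched in code. The matrix itself is not reproduced in the text, so the sketch below assumes a hypothetical 2 x 4 matrix over the field of two elements that merely matches the description given here (three non-zero columns, a zero fourth column). The function names `rank_gf2`, `independent_sets`, and `is_independence_system` are illustrative choices, not part of any library.

```python
from itertools import combinations

# Hypothetical 2 x 4 matrix over the field of two elements, chosen only to
# match the description in the text: columns 1-3 are non-zero, any two of
# them are independent, all three are dependent, and column 4 is zero.
A = [
    [1, 0, 1, 0],
    [0, 1, 1, 0],
]


def rank_gf2(vectors):
    """Rank over GF(2) of a list of 0/1 vectors, by Gaussian elimination."""
    rows = [list(v) for v in vectors]
    width = len(rows[0]) if rows else 0
    rank = 0
    for col in range(width):
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col]), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for r in range(len(rows)):
            if r != rank and rows[r][col]:
                rows[r] = [(a + b) % 2 for a, b in zip(rows[r], rows[rank])]
        rank += 1
    return rank


def independent_sets(matrix):
    """All sets of column labels (1-based) whose columns are independent."""
    n = len(matrix[0])
    columns = {j + 1: [row[j] for row in matrix] for j in range(n)}
    result = set()
    for k in range(n + 1):
        for labels in combinations(columns, k):
            if rank_gf2([columns[j] for j in labels]) == k:
                result.add(frozenset(labels))
    return result


def is_independence_system(I):
    """Check Independence 1-3 for a collection I of frozensets."""
    if not I:                                            # Independence 1
        return False
    for X in I:                                          # Independence 2
        for k in range(len(X)):
            if any(frozenset(S) not in I for S in combinations(X, k)):
                return False
    for X in I:                                          # Independence 3
        for Y in I:
            if len(X) == len(Y) + 1:
                if not any(Y | {x} in I for x in X - Y):
                    return False
    return True


I = independent_sets(A)
print(sorted(sorted(s) for s in I))
print(is_independence_system(I))
```

The brute-force check examines every pair of sets, which is fine for a four-element ground set but grows quickly with the size of E.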

The collection of sets above can be obtained by checking which sets of columns of the matrix A are linearly independent. This type of matroid is often called the vector matroid of the matrix A. A single non-zero column is always linearly independent; in this example any pair of the columns numbered 1, 2, or 3 (the non-zero columns) is also independent. Note that the first three columns taken together are not linearly independent. For the record, one theoretical (but not computationally attractive) way of telling whether some set of k columns from a k-rowed matrix is linearly independent is to compute the determinant of the k × k matrix formed by those columns. If this determinant is non-zero, the columns are independent; otherwise, they are dependent. Also, notice that strictly speaking when one refers to linear independence, one has to specify a field from which the scalars are taken when constructing the linear combinations. Two familiar examples of fields are the rational numbers and the real numbers. In our case we can, in essence, use any field. In particular, we can think of the columns of matrix A as having entries from the field of two elements. When a matroid arises as the vector matroid of a matrix with respect to the field of two elements, it is known as a binary matroid; W.T. Tutte (see next section) determined in 1958 exactly which matroids arise in this way.

Now let us shift gears and look at some graph theory, which on its surface seems not to have much to do with vector spaces or independence.

The diagram below is a graph (or pseudograph, depending on different schools of definition). For the purposes of defining matroids from graphs we allow a vertex to be joined to itself (loops) and/or allow vertices which have multiple edges between them. For simplicity, I will consider only graphs which have the property that one can walk along the edges of the graph between any pair of vertices. Such graphs are called connected and are said to have a single component. The example shown here has the vertices labeled with letters and the edges labeled with numbers. This graph has 6 vertices and 10 edges.

If one starts at a vertex in our graph, then moves along previously unused edges and returns to the original vertex, one travels a circuit. In the graph above examples of circuits are: u, v, s, u; and v (via edge 1), w, v (via edge 2). Note that we will consider two circuits the same if they are listed in reverse cyclic order and that there are many ways of writing down the same circuit that look a bit different. Thus, s, w, x, t, s and w, x, t, s, w and w, s, t, x, w are all different notations for the same circuit.

Graphs have many applications: designing routes for street sweepers, showing relationships between workers and jobs they are qualified for, or coding the moves in a game. However, interest in graphs from the perspective of those who study matroids is not these applications per se, but certain underlying structural features that all graphs share. As a simple example of a structural theorem about graphs, consider the question:

How few edges can a connected graph with n vertices have? Some experimentation should convince you that the answer is (n-1) edges.

The concept of a matroid constructed from a graph (such matroids are known as graphic matroids) starts with the set of edges of the graph together with the subsets of edges which form the circuits of the graph (including circuits which are loops and circuits formed from multiple edges).

Now that you are familiar with the concept of a circuit we can use a slightly simpler notation for them, which works without ambiguity. What we do is list the edges that make up the circuit. Loops show up with a single edge, and multiple edges define circuits with 2 edges. The edges in each circuit will be set off by parentheses rather than set brackets.

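Here is a list of the circuits for the graph in Figure 1: (10), (3,4,5), (1,2), (2,5,6), (1,5,6), (3,4,6,2), (3,4,6,1), (6,9,8,7), (3,4,9,8,7,2), (3,4,9,8,7,1), (2,5,9,8,7), and (1,5,9,8,7). There are 12 circuits. Because edges 1 and 2 form the circuit (1,2), every circuit that uses edge 2 has a companion circuit that uses edge 1 instead.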

We can try to abstract the properties of circuits in a graph in the same way that we abstracted the properties of being independent in a vector space. Suppose we have a collection of subsets C on a set E which obeys the rules:

(Circuit 1) The null set is not in C.

(Circuit 2) If C1 and C2 are members of C where C1 ⊆ C2, then C1 = C2.

A circuit in a graph can not be a proper subset of another circuit, so we are turning this property of circuits into a rule.

(Circuit 3) Suppose that C1 and C2 are distinct members of C, and e is a member of C1 ∩ C2. Then there is a member C3 of C such that:

C3 ⊆ (C1 ∪ C2) - {e}

Circuit 3 captures the property of circuits which says that when two circuits have an edge e in common, one can create a new circuit from the edges of the two circuits which does not use edge e.
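The three circuit rules can be checked mechanically on a small example. The sketch below takes Circuit 1 in its standard form (the empty set is not a circuit) and uses a hypothetical graph, not the one in the figure: a triangle on edges 1, 3, 4 in which edge 2 runs parallel to edge 1. The function name `is_circuit_system` is an illustrative choice.

```python
from itertools import combinations


def is_circuit_system(circuits):
    """Check Circuit 1-3 for a collection of edge sets."""
    C = {frozenset(c) for c in circuits}
    if frozenset() in C:                                  # Circuit 1
        return False
    for C1, C2 in combinations(C, 2):                     # distinct members
        if C1 < C2 or C2 < C1:                            # Circuit 2
            return False
        for e in C1 & C2:                                 # Circuit 3
            if not any(C3 <= (C1 | C2) - {e} for C3 in C):
                return False
    return True


# Circuits of the hypothetical graph: the pair of parallel edges, and the
# triangle closed through either one of them.
triangle_with_double_edge = [(1, 2), (1, 3, 4), (2, 3, 4)]
print(is_circuit_system(triangle_with_double_edge))

# Dropping (2, 3, 4) breaks Circuit 3: (1, 2) and (1, 3, 4) share edge 1,
# yet no listed circuit lies inside {2, 3, 4}.
print(is_circuit_system([(1, 2), (1, 3, 4)]))
```

A check of this kind is a quick way to notice that a hand-written list of circuits is incomplete.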

Another approach to matroids is to say that given the set of edges E of a graph G, the set of circuits of G is the circuit set C of a matroid. This matroid is known as the cycle matroid of the graph. (Although one often sees the words circuit and cycle used interchangeably for the circuit concept in a graph, an advantage of using the word cycle for what I have called circuits in a graph is that one has the word circuit to use for the elements of the set C of a matroid.)

Now it may not be easy to see the connections between the abstraction of independence for a vector space and that of circuits for a graph, but they can be seen by using some definitions. Since in the independent set situation the special subsets of E that are in I are called independent, it seems natural to call a subset of E that is not in I dependent. Those sets in I which are maximal, that is, sets that are no longer in I once any further element of E is added to them, are called bases. One can prove that in any matroid the bases all have the same number of elements, just as is true for the bases of a vector space. If maximal independent sets are of interest, what about the minimal dependent sets? These are the dependent sets with the property that if any element is dropped from them, one gets an independent set. We will define these sets, which are minimally dependent, to be circuits! Note that once the independent sets of a matroid are specified, the circuits are automatically determined, and conversely. Now using Independence rules 1 - 3, one can prove that the set of circuits just defined obeys the rules Circuit 1 - 3, thereby justifying the seemingly strange choice of name.
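As a small illustration of these definitions, the sketch below computes the bases and circuits for the collection of independent sets described in the matrix example (every set of at most two labels drawn from the non-zero columns 1, 2, 3). The helper names `bases` and `circuits` are illustrative, and the brute-force search is only meant for tiny ground sets.

```python
from itertools import combinations

E = {1, 2, 3, 4}
# Independent sets as described for the matrix example: every set of at most
# two labels drawn from the non-zero columns 1, 2, 3.
I = {frozenset(s) for k in range(3) for s in combinations([1, 2, 3], k)}


def bases(E, I):
    """Maximal members of I: adding any further element of E leaves I."""
    return {X for X in I if all(X | {e} not in I for e in E - X)}


def circuits(E, I):
    """Minimal dependent sets: not in I, but every one-element deletion is."""
    result = set()
    for k in range(1, len(E) + 1):
        for S in map(frozenset, combinations(sorted(E), k)):
            if S not in I and all(S - {e} in I for e in S):
                result.add(S)
    return result


print(sorted(sorted(b) for b in bases(E, I)))
print(sorted(sorted(c) for c in circuits(E, I)))
```

In this example the zero column 4 is a circuit all by itself, playing the same role that a loop plays in a graph.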

If one takes any spanning tree of a connected graph (i.e., a subgraph which is a tree and includes all the vertices) and adds one edge e of the graph that is not already in the tree, one gets a unique circuit. If one removes one edge of this circuit different from e, one gets a new spanning tree. Spanning trees all have the same number of edges (one fewer than the number of vertices); this may remind you of the property that the bases of a vector space have. Furthermore, the way one can get from one spanning tree to another is similar to the way one can enlarge one independent set using an element of another (Independence 3).
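The exchange between spanning trees can be sketched as follows. The graph here is a hypothetical four-vertex example, not the graph from the figure, and the helper names (`tree_path`, `fundamental_circuit`, `is_spanning_tree`) are illustrative.

```python
# Hypothetical connected graph on vertices a, b, c, d: a dictionary mapping
# each edge label to its pair of endpoints.
edges = {1: ("a", "b"), 2: ("b", "c"), 3: ("c", "d"), 4: ("a", "d"), 5: ("a", "c")}
tree = {1, 2, 3}          # a spanning tree: the path a-b-c-d


def tree_path(tree, start, goal):
    """Edge labels on the unique path in the tree from start to goal."""
    stack = [(start, None, [])]
    while stack:
        vertex, came_by, path = stack.pop()
        if vertex == goal:
            return path
        for label in tree:
            if label == came_by:
                continue              # do not walk straight back
            u, v = edges[label]
            if vertex in (u, v):
                stack.append((v if vertex == u else u, label, path + [label]))
    raise ValueError("tree does not connect the two vertices")


def fundamental_circuit(tree, e):
    """The unique circuit created by adding non-tree edge e to the tree."""
    u, v = edges[e]
    return set(tree_path(tree, u, v)) | {e}


def is_spanning_tree(edge_set):
    """True if the edges reach every vertex and number one fewer than them."""
    vertices = {x for pair in edges.values() for x in pair}
    if len(edge_set) != len(vertices) - 1:
        return False
    reached = {next(iter(vertices))}
    grew = True
    while grew:
        grew = False
        for label in edge_set:
            u, v = edges[label]
            if (u in reached) != (v in reached):
                reached |= {u, v}
                grew = True
    return reached == vertices


circuit = fundamental_circuit(tree, 5)
print(sorted(circuit))
for f in sorted(circuit - {5}):
    new_tree = (tree | {5}) - {f}
    print(f, sorted(new_tree), is_spanning_tree(new_tree))
```

Adding edge 5 closes a circuit through the tree path between its endpoints, and deleting any other edge of that circuit leaves a spanning tree again.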

The phenomenon which one observes, that ideas which come up in vector spaces (independence and span) and ideas which come up in graph theory (circuits and spanning trees) have certain similarities, is what drives the abstraction built into the theory of matroids. In addition to the two axiomatic approaches looked at here, independent sets and circuits, there are other axiomatic approaches involving rank functions, closure, and bases, all of which lead to the same place, a theory of matroids.
