
Investigating the Use of Free, Open Source, Open Access, and Interactive Mathematics Textbooks in College Courses

Communicated by Notices Associate Editor William McCallum

While textbooks play an integral role in the teaching and learning of post-secondary mathematics and place a significant financial burden on students, surprisingly little is known about how students and instructors engage with them within an actual mathematics course. Indeed, what we know about textbook use comes primarily from individual interviews with a small number of participants in an artificial laboratory setting (e.g., [12, 18, 20]) or from surveys at a single institution (e.g., [5, 19]). This article reports on what we have learned about how students and instructors use textbooks written in PreTeXt in the messy world of daily teaching and learning. The granularity that PreTeXt provides in constructing textbooks makes it possible to generate data that let researchers test intuitions mathematicians might have about how students (and teachers) use textbooks.

Recent JavaScript add-ons in PreTeXt (https://pretextbook.org/), a markup language that facilitates the creation of open-access textbooks, allow us to unobtrusively observe how students interact with an electronic textbook at a fine level of detail. For instance, we can record what a reader is viewing at 1-second intervals and which interactive features of the text they open; we can also see whether they spend time on definitions and proofs, suggesting a careful reading, or whether they come back to them only after encountering subsequent problems. Besides being aggregated to reveal overall trends in student use, these data serve as the basis for follow-up conversations in which users narrate their progress through a particular section of their textbook.

Figure 1. Viewing data for instructor 411008: (a) full course; (b) one day, full textbook view; (c) one day view, one section; (d) one day view, one section, individual level.

1. Studying Textbook Use by Teachers and Students

The three textbooks in the project, Active Calculus (AC, [3]), A First Course in Linear Algebra (FCLA, [1]), and Abstract Algebra: Theory and Applications (AATA, [7]), were chosen because they are used in courses that tend to serve different profiles of students in a mathematics program. Calculus draws a wide range of students across institutions pursuing a variety of majors, including biology, chemistry, engineering, mathematics, physics, business, economics, and health-related fields. Linear algebra tends to be offered in the first or second year of a major and mostly serves mathematics and computer science majors; Beezer’s textbook is also intended to serve as an introduction to proof. Abstract algebra is taken largely by mathematics majors, including future secondary teachers, typically in the third or fourth year of their program. The textbooks used in the project had features that promoted interaction: the ability to expand or hide content as needed (e.g., proofs or worked-out examples), Sage cells for complex computations in real time, links to interactive Desmos or GeoGebra animations, WeBWorK exercises, text highlighting, and questions that can be answered directly in the textbook, with responses immediately available to teachers and students. Some of these features are standard in PreTeXt textbooks (e.g., Sage cells, expanding and hiding content), whereas others (e.g., collecting student responses, highlighting) were available only to the project test sites. Each user had an ID that masked their identity.

PreTeXt identifies every feature in a textbook with a permanent ID, which, among other things, makes it easier to collect data on what any given user is viewing and for how long. JavaScript records any change in viewing, such as going to a new page, scrolling so that a new element enters the viewing window, or clicking on specific items (e.g., examples, proofs) to open or close them. “Tracking is recorded every second for the current item, and then the information is sent to the server every 10s (or when the user leaves the page) and recorded on the server log” ([15], p. 837). The recorded information also includes a user identifier, so the total time each user spent on any given item can be computed. This information is used to display use at various levels of detail: for an entire semester or for individual days and sections, and for all users or individual users. Figure 1 illustrates several levels of this representation, which we call heatmaps. In a heatmap, columns correspond to days in the semester and rows to sections in each textbook chapter; each rectangle represents the cumulative viewings of an individual section on a given day (Figure 1a). Selecting March 23rd, we see the cumulative viewings of all the sections on that day (Figure 1b) and the cumulative viewings of each individual section on that day (Figure 1c); at the next level of detail, we see the views of individual users (Figure 1d), each identified with a different color.
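To make the aggregation behind the heatmaps concrete, here is a minimal Python sketch. It assumes a hypothetical server log of (user ID, section ID, timestamp, seconds viewed) records, of the kind the periodic uploads could produce; the record layout, the section IDs, the dates, and the function names `heatmap` and `drill_down` are illustrative assumptions, not PreTeXt’s actual implementation. Each heatmap cell sums seconds per section per day, and restricting to one day and one section gives an individual-level view like Figure 1d.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical log records: (user_id, section_id, ISO timestamp, seconds viewed).
# The field layout is an assumption for illustration, not the real PreTeXt log format.
LOG = [
    ("u1", "sec-limit", "2021-03-23T10:00:00", 10),
    ("u1", "sec-limit", "2021-03-23T10:00:10", 10),
    ("u2", "sec-derivative", "2021-03-23T21:15:00", 7),
    ("u1", "sec-derivative", "2021-03-24T09:00:00", 10),
]


def day_of(stamp):
    """Calendar day (YYYY-MM-DD) of an ISO timestamp."""
    return datetime.fromisoformat(stamp).date().isoformat()


def heatmap(log, user=None):
    """Cumulative seconds per (day, section) cell, optionally for a single user."""
    cells = defaultdict(int)
    for user_id, section, stamp, seconds in log:
        if user is None or user_id == user:
            cells[(day_of(stamp), section)] += seconds
    return dict(cells)


def drill_down(log, day, section):
    """Seconds per user for one section on one day (individual-level view)."""
    per_user = defaultdict(int)
    for user_id, sec, stamp, seconds in log:
        if sec == section and day_of(stamp) == day:
            per_user[user_id] += seconds
    return dict(per_user)


print(heatmap(LOG))
print(drill_down(LOG, "2021-03-23", "sec-derivative"))
```

Drawing each (day, section) cell as a shaded rectangle, with days as columns and sections as rows, would give a display in the spirit of Figure 1a.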

One limitation of the method is that more than one item is occasionally visible; because we cannot do eye tracking, we cannot be sure which one is being viewed. In practice, this has not been an issue.

PreTeXt has removed several barriers to studying how people use textbooks in naturalistic settings. With textbooks written in PreTeXt there is no need for expensive equipment to track what people are viewing at any given moment: viewing is recorded automatically and in real time, and the record can serve as a recall mechanism as people narrate how they interacted with a particular section of their textbook. Did they view the definitions or the problems first? Did they spend most of their time on a proof or on the solutions to a problem? Pairing this information with other sources of data strengthens the inferences that can be made about how people use their textbooks (see the Appendix for details on the other data collected as part of the project). Because this tracking happens whenever the textbook is used, rather than only when researchers ask participants to use it, it is possible to collect data from participants who need not be geographically close to the researchers. This helps us learn, among other things, when people tend to use the textbook more or less frequently, and which features of the textbook are the most and least viewed. Because the textbooks are free and accessible to anyone, it is possible to work with many participants simultaneously without a significant increase in cost. The viewing data can be complemented with standard techniques such as surveys, interviews, and observations, so that researchers can build comprehensive data sets to explore the many ways in which teachers and students use textbooks in real classrooms as they go about their daily activities in a course.

With these data we have found minimal measurable effects of using these textbooks on student outcomes [2, 11] and a wider range of student reading practices than previously reported [14]. In addition, investigating the use of Reading Questions and Preview Activities, we have found that students and instructors use these features in a wider range of ways than the textbook authors intended [9, 13]; moreover, students interact with the reading questions or preview activities, and with the surrounding material in the textbook, in three primary ways, which account for 88% of all students’ interactions with the feature [8]. In this article we expand on this last finding, because it can help authors craft textbooks that better support teaching and learning.

2. Understanding the Use of One Interactive Feature in Depth

We chose to study how students and teachers used an interactive feature embedded in the textbooks, which we call a questioning device [13, 16], both as a way to understand textbook use in general and because this feature was added to the textbooks to give faculty a chance to reflect on their plans for the day and to respond to what students produced as they read the material before class. A questioning device is simply a set of questions, each followed by a box in which students type their answers directly into the textbook. They are called Reading Questions in the linear algebra and abstract algebra textbooks and Preview Activities in the calculus textbook. In Figure 2 we present an example of a student view and a teacher view of the reading questions in WILA, which stands for “What Is Linear Algebra,” a labeled section in [1].

Figure 2. Reading questions in the section “What Is Linear Algebra” [1]: (a) student view; (b) partial teacher view.

These devices were designed to ensure that students read the textbook before coming to class; once students submit their answers, the answers are available for teachers to see. Upon reading the responses, teachers may decide to change their lessons, perhaps by adding an example, discussing a concept more thoroughly, or skipping an explanation that might not be needed. The devices are meant as a classroom assessment tool, and the authors justify this choice in terms of time: the idea is not to overburden instructors with more work but to give them a quick way to assess whether, and how well, the class grasped the ideas in the section they are about to teach. Although the authors encourage instructors to give individual students some credit for completion, as an incentive to answer the questions before class, the devices are not meant to be graded for correctness.

2.1. How do users say they use the questioning devices?

To investigate the use of this device, we used the instrumental approach [17], which is useful for revealing the multiple ways in which an object can be used by identifying the different uses people give to it. Central to the instrumental approach is the notion of instrument, defined as the pairing of an artifact and a scheme of use. Artifact refers to the object itself, whereas scheme of use refers to a set made of (a) situations, (b) goals, (c) actions, (d) rationales, and (e) potential inferences for repeated activity. A knife, for example, is an artifact that might be used to spread butter (say, during breakfast) or to tighten a loose screw (say, on a pot handle). The object is the same, but the user (me) has generated different instruments to accomplish different goals, with different ways of using the knife, in different situations; in similar future situations, the artifact is likely to generate similar utilization schemes. The theory, which emerged in the context of technology design, also alerts us that users of any artifact will not only use it in the ways the designers anticipated but will also invent new and unanticipated uses. Thus, the theory indicated that we should expect our users, students and teachers, to invent new uses, creating instruments different from those envisioned by the textbook authors.
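The definition of an instrument can be read as a small data structure. The Python sketch below encodes it that way, purely as an illustration: the class and field names, and the specific actions, rationales, and inferences filled in for the knife, are our own hypothetical choices rather than part of Rabardel’s formulation. It captures the point of the example: one artifact, two distinct instruments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scheme:
    """A scheme of use, one field per component (a)-(e) of the definition."""
    situation: str
    goal: str
    actions: tuple
    rationale: str
    inference: str  # expectation for repeated activity in similar situations


@dataclass(frozen=True)
class Instrument:
    """An instrument pairs one artifact with one scheme of use."""
    artifact: str
    scheme: Scheme


# Hypothetical details for the knife example in the text.
spread = Instrument("knife", Scheme(
    situation="breakfast",
    goal="spread butter",
    actions=("hold the handle", "drag the flat of the blade across the bread"),
    rationale="the blade is flat and blunt enough",
    inference="use the knife this way at future breakfasts",
))

tighten = Instrument("knife", Scheme(
    situation="loose pot handle",
    goal="tighten a screw",
    actions=("fit the tip into the screw slot", "turn clockwise"),
    rationale="the tip fits the slot and no screwdriver is at hand",
    inference="reach for a knife in similar small repairs",
))

# Same artifact, different instruments.
print(spread.artifact == tighten.artifact, spread == tighten)
```

Because the dataclasses are frozen, instruments are hashable, so a collection of observed instruments can be deduplicated with a set, which is roughly what cataloguing the distinct uses of one artifact amounts to.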

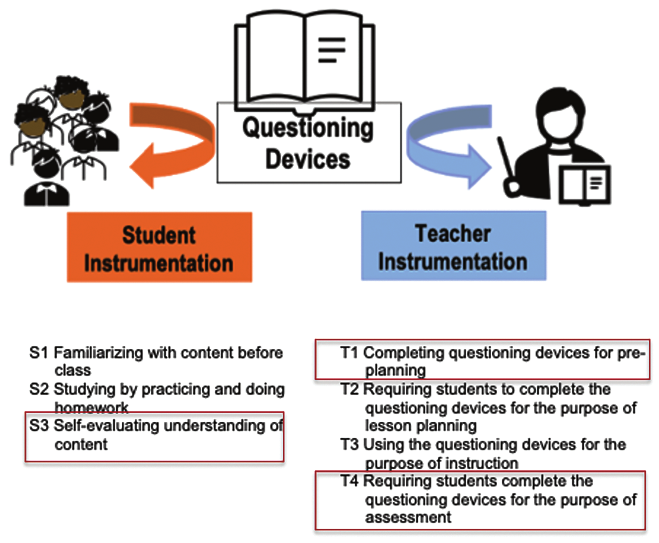

We applied this theory to the study of questioning devices and used the data that students and teachers reported to identify their schemes of use of the questioning devices. Using the instructor data, we identified four schemes of use: (T1) Completing questioning devices for pre-planning, (T2) Requiring students to complete the questioning devices for the purpose of lesson planning, (T3) Using the questioning devices for the purpose of instruction, and (T4) Requiring students to complete the questioning devices for the purpose of assessment [13]. The first and fourth schemes were not anticipated by the author-designers. In Completing questioning devices for pre-planning, teachers used the devices to test their own knowledge of the material: instructors said that they would read the textbook and then attempt to answer the questions; if something was not clear, they would go back to the text and make notes for the class. This scheme seemed to be prompted by an interest in appearing competent in front of the students. In Requiring students to complete the questioning devices for the purpose of lesson planning, instructors had two goals: first, to make sure students read the material, and second, to identify misunderstandings that they needed to address in the lesson. Instructors said that they used this information to alter their lesson plans as needed; the scheme was driven by their belief that students needed to struggle with the material in order to learn.

In Using the questioning devices for the purpose of instruction, teachers dealt with the devices in class in one way or another: they assigned them to be completed during class; showed selected anonymous answers and discussed them in large groups, small groups, or individually; or directly provided the correct answers. In this way, the responses became part of the class activity. Teachers justified this use by saying that they knew some students did not have time outside of class to complete the questions, that discussion and engagement in groups would be more educative for the students, or that identifying errors was a good strategy for learning. Finally, in Requiring students to complete the questioning devices for the purpose of assessment, teachers assigned the devices after the material was discussed in class and either collected the responses for a grade, created quizzes that included some version of the questions, or used the questions to formulate learning objectives for the content, which then guided the design of assessments. In using the devices in this way, instructors sought to fulfill their responsibility of attesting that the students had learned the material: by giving them a grade, by giving them feedback, and by aligning their teaching with the objectives implicit in the questions.

Using the student data, we identified three schemes of use: (S1) Familiarizing with content before class, (S2) Studying by practicing and doing homework, and (S3) Self-evaluating understanding of content [16]. In Familiarizing with content before class, students read the textbook and provided the answers they thought appropriate; they indicated that the reasons for this use were to be aware of what was going to happen in class, to fulfill a course requirement, or to bring questions to their teacher. In Studying by practicing and doing homework, students answered the reading questions as part of completing their assigned homework, because the answers gave them insight into how to do it. In Self-evaluating understanding of content, students indicated that they used the reading questions to check that they had understood and learned the content; when they felt they could not answer the questions, they read the material again until they felt competent enough. They justified this use by the importance of making sure that they had mastered the material. This scheme was not anticipated by the authors (see Figure 3). We are currently investigating how these schemes relate to each other, that is, whether there is any alignment between the schemes used by teachers and those used by students.

Figure 3. Instrumentation of the questioning devices by students and teachers using the same textbook. Red boxes indicate schemes not anticipated by the authors.

2.2. How do users view material associated with questioning devices?

Once these schemes had been identified, we returned to the viewing data to study whether they could be mapped to patterns in the viewing of the questioning devices. We applied a data mining technique, knowledge graphs [6], to 16,019 unique views of the questioning devices in the HTML textbooks by 492 students and 27 instructors across five cycles of data collection (Spring 2019 to Spring 2021). A knowledge graph is a data structure that captures relational knowledge by recording interactions between individuals and entities. Knowledge graphs are typically used to establish whether consistent, repetitive behavior occurs for the same student or across several students. Interactions are captured using networks of nodes and edges that reflect a particular behavior. In our case, nodes represented the sections viewed and the time in minutes spent on them; directed edges represented a jump from one section to a new section; and undirected edges represented possible concurrent viewing of sections within the same period. The viewing data are thus put in a graphical format that provides a framework for data integration, unification, analytics, and sharing. The combination of nodes and edges creates knowledge graphs that are unique to each student yet display common patterns that occur while viewing the textbooks.
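The node and edge definitions above can be sketched in Python as follows. This is a minimal illustration, not the project’s implementation: it assumes one student’s viewing data have already been reduced to hypothetical (section, start minute, end minute) intervals, and the section IDs are invented. Node weights are minutes spent, directed edges record each jump to a different section in time order, and undirected edges link sections whose viewing intervals overlap.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical viewing intervals for one student: (section_id, start_min, end_min).
VIEWS = [
    ("sec-limit-intro", 0, 6),
    ("ssec-limit-1", 6, 14),
    ("ssec-limit-2", 13, 20),  # overlaps ssec-limit-1: both possibly on screen
    ("sec-derivative-intro", 22, 30),
]


def knowledge_graph(views):
    """Build (node_minutes, directed_jumps, concurrent_pairs) from viewing intervals."""
    # Nodes: each section, weighted by total minutes viewed.
    minutes = defaultdict(float)
    for section, start, end in views:
        minutes[section] += end - start

    # Directed edges: a jump from one section to a different one, in time order.
    ordered = sorted(views, key=lambda view: view[1])
    jumps = {
        (a[0], b[0]) for a, b in zip(ordered, ordered[1:]) if a[0] != b[0]
    }

    # Undirected edges: two sections whose viewing intervals overlap in time.
    concurrent = {
        frozenset((a, b))
        for (a, s1, e1), (b, s2, e2) in combinations(views, 2)
        if a != b and s1 < e2 and s2 < e1
    }
    return dict(minutes), jumps, concurrent


nodes, jumps, concurrent = knowledge_graph(VIEWS)
print(nodes)
print(sorted(jumps))
print(concurrent)
```

Using `frozenset` for the concurrent pairs makes those edges genuinely undirected, while the ordered tuples in `jumps` keep the direction of each move.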

Three types of evidence are needed to confirm that knowledge graphs would be useful to us. First, the knowledge graphs of different students following the same utilization scheme need to be consistent; second, the knowledge graphs of the same student following the same utilization scheme need to be consistent; and third, each utilization scheme should lead to a community of unique students represented by unique knowledge graphs (a network of knowledge graphs). We were able to identify three distinct clusters of knowledge graphs that matched the utilization schemes we identified for students. In Figure 4 we present the first type of knowledge graph that we identified, characterized by sequential viewing of the material in order to work on the reading questions or preview activities. Figure 4 presents viewing data for one Active Calculus student on September 9 between 2:15 pm and 3:15 pm. The top panel (Figure 4a) shows viewing related to the preview activities in Section 1.2, The notion of limit (outlined in red), before the student changes section; the panel immediately below (Figure 4b) shows the corresponding knowledge graph. The third panel (Figure 4c) shows viewing by the same student during the same period, after the student moved to a new section, Section 1.4, The derivative function, to work on its preview activities; the panel immediately below (Figure 4d) shows the knowledge graph for this viewing. In the figure, “sec” means section and “ssec” means subsection. The intro of each section includes the Preview Activities.
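One simple way to make “consistent” operational, offered here only as an assumption and not as the project’s criterion, is to compare the edge sets of knowledge graphs built for the same section using the Jaccard index (shared edges divided by all distinct edges) and to require every pair in a group to reach a cutoff. The edge sets and the 0.5 cutoff below are hypothetical.

```python
from itertools import combinations


def jaccard(edges_a, edges_b):
    """Shared edges over all distinct edges; 1.0 means identical edge sets."""
    a, b = set(edges_a), set(edges_b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def consistent(graphs, cutoff=0.5):
    """True if every pair of edge sets meets the (hypothetical) similarity cutoff."""
    return all(jaccard(g, h) >= cutoff for g, h in combinations(graphs, 2))


# Hypothetical directed jumps for two students viewing the same section.
student_1 = {("intro", "ssec-1"), ("ssec-1", "ssec-2"), ("ssec-2", "exercises")}
student_2 = {("intro", "ssec-1"), ("ssec-1", "ssec-2"), ("ssec-2", "next-sec")}

print(jaccard(student_1, student_2))
print(consistent([student_1, student_2]))
```

The same check could be applied to one student’s graphs from different sessions, which corresponds to the second type of evidence.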

Figure 4. Example of knowledge graph 1, sequential viewing; a black arrow indicates a jump to a forward section, and a line a possible concurrent viewing. (a) Heatmap of sections viewed for the preview activities in Section 1.2, The notion of limit (outlined in red), before the student changes section; (b) corresponding knowledge graph; (c) heatmap during the same period for the preview activities in Section 1.4, The derivative function; (d) corresponding knowledge graph. “sec” means section; “ssec” means subsection; ssec-intro includes the Preview Activities.

The second type of knowledge graph is characterized by students working their way through the material, going back to other sections to check information, and viewing the reading questions and the exercises in the section concurrently. An example is shown in Figure 5. In Figure 5a we see the viewing activity of a student using FCLA between 9:00 am and 9:30 am on the Bases section (red box, related to the reading questions); the student goes back to sections viewed earlier (represented by the purple arrows). In Figure 5b we see the corresponding typical knowledge graph: as the student gets to the reading questions, they check back on other sections, presumably to learn the associated material.

Figure 5. Example of knowledge graph 2, looping back to prior sections; a black arrow indicates a jump to a forward section, a line a possible concurrent viewing, and a purple arrow a jump to an earlier section. (a) Heatmap of viewing in the Bases section; (b) corresponding knowledge graph.

The third type of knowledge graph is characterized by students starting their viewing with the reading questions or the preview activities and then checking material in various sections, presumably to find out what they know and to answer the questions, as shown in Figure 6.

Figure 6. Example of knowledge graph 3, starting with the reading questions or preview activities; a black arrow indicates a jump to a forward section, a line a possible concurrent viewing of sections, and a purple arrow a jump back to an earlier section. (a) Student viewing associated with the Reading Questions in the AATA textbook, The Division Algorithm section; (b) corresponding knowledge graph.

We interpret the first type of knowledge graph (consecutive viewing of sections in the textbook) as indicating that students might be familiarizing themselves with the content before they attempt the questions or problems in the section or chapter, possibly because the work is required before class; we associate this kind of viewing with the first utilization scheme, familiarizing with content before class. We interpret the second type of knowledge graph (looping back to sections) as indicating that, as students work on the material and arrive at the reading questions or preview activities, they go back to other sections, presumably to confirm or revisit information that the questions call for; in these graphs the exercises are also viewed, suggesting that the work might be related to preparing assignments for the class. We associate this kind of viewing with the second utilization scheme, studying by practicing and doing homework. We interpret the third type of graph (starting with the reading questions or preview activities) as indicating that the student begins by viewing the questions to see whether they can answer them; when they are unsure, they go to sections of the textbook that might be related to the content being addressed, and then repeatedly return to the reading questions or preview activities to confirm their answers. We associate this viewing with the third utilization scheme, self-evaluating understanding of content: students find out how much they can answer with the knowledge they have and then check relevant sections to corroborate or correct their thinking. The algorithm performs reasonably well in mapping existing knowledge networks to utilization schemes (see the Appendix for details), with close to 88% of knowledge graphs classified and mapped to a utilization scheme.
There is still work to be done to improve the classification process: the algorithm has difficulty creating knowledge graphs when many sections are viewed at the same time, which can happen when students scroll rapidly through the sections. We also want to investigate whether the unmapped networks contain other patterns that could be identified as different ways of using the reading questions and preview activities.
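To illustrate how viewing sequences might be sorted into the three types, here is a rule-based Python sketch. It is deliberately simpler than, and not the same as, the project’s algorithm: the three rules (all jumps forward; a backward jump plus a visit to the exercises; starting at and returning to the questions), the section IDs, and the sample sessions are all hypothetical. Sequences that match no rule stay unmapped, and the share of mapped sequences plays the role of the coverage figure reported for the real classifier.

```python
# Hypothetical positions of parts of one textbook section, in reading order.
ORDER = {"intro": 0, "ssec-1": 1, "ssec-2": 2, "questions": 3, "exercises": 4}

# Each graph type mapped to the student utilization scheme it is associated with.
SCHEMES = {
    "KG1": "S1 Familiarizing with content before class",
    "KG2": "S2 Studying by practicing and doing homework",
    "KG3": "S3 Self-evaluating understanding of content",
}


def classify(visits, order=ORDER, questions="questions", exercises="exercises"):
    """Return 'KG1', 'KG2', 'KG3', or None (unmapped) for a sequence of section IDs."""
    if not visits:
        return None
    steps = [order[b] - order[a] for a, b in zip(visits, visits[1:])]
    backward = any(step < 0 for step in steps)
    # KG3: starts with the questions and keeps coming back to them.
    if visits[0] == questions and visits.count(questions) > 1:
        return "KG3"
    # KG2: loops back to earlier material and also views the exercises.
    if backward and exercises in visits:
        return "KG2"
    # KG1: strictly sequential viewing (no backward jumps).
    if not backward:
        return "KG1"
    return None


SESSIONS = [
    ["intro", "ssec-1", "ssec-2", "questions"],
    ["intro", "ssec-1", "ssec-2", "questions", "ssec-1", "questions", "exercises"],
    ["questions", "ssec-2", "questions", "ssec-1", "questions"],
    ["ssec-2", "intro"],
]

labels = [classify(session) for session in SESSIONS]
coverage = sum(label is not None for label in labels) / len(labels)
for label in labels:
    print(label, SCHEMES.get(label, "unmapped"))
print(f"mapped: {coverage:.0%}")
```

The rule order matters: the KG3 test runs first, so a session that starts at the questions and returns to them is not relabeled as KG2 even if it also jumps backward and visits the exercises.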

From this work we confirmed, first, that students and teachers, as humans using tools, will always invent additional uses for artifacts designed with a particular purpose in mind, and second, that the textbook is a very important tool for their work. Now that the anticipated uses have been confirmed and unanticipated ones documented, designers may want to reconsider the uses observed and whether modifications are needed to preserve the spirit of the feature. The questioning devices are not meant to be a tool for assessing individual students’ correct interpretation of the material but a classroom assessment tool for gauging how the material was understood, and they are not intended to add work for teachers. Grading the responses runs counter to this purpose: if students are reading the material for the first time, they should be expected to make mistakes as they answer the questions. These are important considerations for designers as they continue to develop new features in their textbooks.

3. What Lies Ahead

With a data set of this size, many more analyses are still underway. While we know more about how faculty use their textbook for planning [9, 10] and, in one case, have seen significant changes in instruction [4], we have not yet investigated how instructors’ use can influence students’ use of their textbook. Our students report that they usually use their textbook as their teachers indicate, but corroborating this information and understanding the nature of the influence will require additional work. Other investigations involve a broader analysis of classroom textbook use, drawing on information from observations and interviews.

Yet other investigations pertain to the data collected as students answer the reading questions and preview activities. What can that cumulative information, from many students answering these questions across different institutions, say about the questions themselves and about the nature of the understandings they elicit? How can instructors use that information as they prepare to teach their lessons? Currently we are working on a small project with a handful of instructors who are looking at data collected in prior cycles on two sections of reading questions in FCLA and deciding whether to change the questions, add questions, or use prior answers during class, among other options. We are aided by placing our textbooks in Runestone (https://runestone.academy/), a site that hosts free open-source textbooks and, among many other things, makes removing and adding questions easier. We are finding that analyzing the responses for the kind of thinking that may lie behind them is very interesting, and faculty have been excited to see, and use, this information as well.

Some ethical concerns tend to be raised when this study is described. That something can be done (in our case, tracking student viewing) does not mean that it should be done. When I describe the project to non-educators, they tend to raise their eyebrows and say that the tracking sounds creepy. It does sound creepy, though we should remember that social media and the websites we visit do this all the time! Such uses need to be weighed against their benefits for individuals, communities, and society, and potential risks need to be minimized. Our participants were informed of the study and of our protocols for anonymizing data and keeping information confidential; they were informed about what the data were going to be used for, and in which contexts (e.g., writing articles). Our research team does not have access to any student personal information or to information students did not give permission to share (e.g., grades). Likewise, faculty were invited to participate under the assumption that any research report would mask their identity (even our internal reports use only the instructors’ and students’ IDs). Participants have not shared negative reactions with us on seeing their individual heatmaps; on the contrary, they appear curious and puzzled about what the map shows about their own use of the textbook. In general, the heatmaps help them recall their actions, which is what we are most interested in. Still, a broader, more transparent conversation about the ethics of collecting and using these data needs to include students, instructors, authors, and textbook designers, precisely because of the open-source nature of these textbooks.

A major contribution of this investigation is the demonstration that this kind of work can be done ethically, in multiple contexts, and with actual students and instructors. Our study shows that textbooks contribute to a network of resources that students and teachers use in their day-to-day work. The availability of the textbook in HTML format on any device made it easier for faculty with back-to-back classes to check students’ responses to the reading questions on their phones on the way to the classroom; students reported similar appreciation for the multiple platforms on which the textbook was available. Our participants did not report disruptions to their work while teaching and learning remotely during the COVID-19 pandemic; although our observations were moderately affected, the work continued for all.

Finally, it might be important to rethink how we assess the impact or effectiveness of these tools. Using an independent measure of knowledge was complicated by the reality that instructors do not cover all the content in their textbooks and that they use different methods to teach the material. Devising an instrument that could account for change in knowledge would be a major undertaking, and it is unclear whether such a measure would help identify how much a resource such as the textbook contributed to any measured change in knowledge. Reducing the phenomenon to a single measure might not yield interesting information.

Acknowledgments

This material is based upon work supported by the National Science Foundation (IUSE 1625223, 1626455, 1624634, 1624998, 1821706, 1821329, 1821509, 1821114). Any opinions, findings, and conclusions or recommendations expressed are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Robert Beezer, David Farmer, Tom Judson, Kent Morrison, David Austin, and Megan Littrell are collaborators in the project. Special thanks to Claire Boeck, Lynn Chamberlain, Saba Gerami, Palash Kanwar, Carlos Quiroz, the Undergraduate Research Opportunity Program, and the Research on Teaching Mathematics in Undergraduate Settings (RTMUS) research group at the University of Michigan for their invaluable support in various aspects of the project and their enthusiasm and feedback. Thanks also to the participants; without them this work would not have been possible.

The University of Michigan is located on the traditional territory of the Anishinaabe people. In 1817, the Ojibwe, Odawa, and Bodewadami Nations made the largest single land transfer to the University of Michigan. This was offered ceremonially as a gift through the Treaty at the Foot of the Rapids so that their children could be educated. Through these words of acknowledgment, their contemporary and ancestral ties to the land and their contributions to the University are renewed and reaffirmed.

References

- [1] R. A. Beezer, First course in linear algebra, Congruent Press, Gig Harbor, WA (2021). Available at https://books.aimath.org/.

- [2] C. Boeck, Quantitative modeling of UTMOST data (2021, June 18). Retrieved from https://dx.doi.org/10.7302/5842.

- [3] M. Boelkins, Active Calculus, CreateSpace Independent Publishing Platform (2021). Available at https://activecalculus.org/single/.

- [4] S. Gerami, V. Mesa, and Y. Liakos, Using an inquiry-oriented calculus textbook to promote inquiry: A case in university calculus (2021). Paper presented at the International Conference of the Psychology of Mathematics Education, Technion Institute of Technology, Haifa, Israel.

- [5] G. Gueudet and B. Pepin, Didactic contract at the beginning of university: A focus on resources and their use, International Journal of Research in Undergraduate Mathematics Education 4 (2018), no. 1, 56–73, DOI 10.1007/s40753-018-0069-6.

- [6] W. L. Hamilton, Representation learning on graphs: Methods and applications, Graph Representation IEEE (2017). Retrieved from https://www-cs.stanford.edu/people/jure/pubs/graphrepresentationieee17.pdf.

- [7] T. Judson, Abstract algebra: Theory and applications, Orthogonal Publishing L3C (2021). Available at http://abstract.pugetsound.edu/. HTML available at http://abstract.ups.edu/aata/.

- [8] P. Kanwar and V. Mesa, Mapping student real-time viewing of dynamic textbooks to their utilization schemes of questioning devices (accepted). Paper presented at the Conference of the European Research in Mathematics Education, Bolzano, Italy.

- [9] Y. Liakos, S. Gerami, V. Mesa, T. Judson, and Y. Ma, How an inquiry-oriented textbook shaped a calculus instructor’s planning, International Journal of Education in Mathematics, Science and Technology 53 (2021), no. 1, 131–150, DOI 10.1080/0020739X.2021.1961171.

- [10] V. Mesa, Lecture notes design by post-secondary instructors: resources and priorities, in R. Biehler, G. Gueudet, M. Liebendörfer, C. Rasmussen, and C. Winsløw (eds.), Practice-oriented research in tertiary mathematics education: New directions, Springer (in press).

- [11] V. Mesa, S. Gerami, and Y. Liakos, Exploring the relationship between textbook format and student outcomes in undergraduate mathematics courses (2020). Paper presented at the 23rd Annual Conference on Research on Undergraduate Mathematics Education, Boston, MA.

- [12] V. Mesa and B. Griffiths, Textbook mediation of teaching: An example from tertiary mathematics instructors, Educational Studies in Mathematics 79 (2012), no. 1, 85–107, DOI 10.1007/s10649-011-9339-9.

- [13] V. Mesa, Y. Ma, C. Quiroz, S. Gerami, Y. Liakos, T. Judson, and L. Chamberlain, University instructors’ use of questioning devices in mathematics textbooks: an instrumental approach, ZDM – Mathematics Education 53 (2021), no. 6, 1299–1311, DOI 10.1007/s11858-021-01296-5.

- [14] V. Mesa and A. Mali, Studying student actions with dynamic textbooks in university settings: The log as research instrument, For the Learning of Mathematics 40 (2020), no. 2, 8–14.

- [15] K. L. O’Halloran, R. A. Beezer, and D. W. Farmer, A new generation of mathematics textbook research and development, ZDM Mathematics Education 50 (2018), no. 5, 863–879.

- [16] C. Quiroz, S. Gerami, and V. Mesa, Student utilization schemes of questioning devices in undergraduate mathematics dynamic textbooks (accepted). Paper presented at the Conference of the European Research in Mathematics Education, Bolzano, Italy.

- [17] P. Rabardel, People and technology: a cognitive approach to contemporary instruments, Université Paris 8 (2002). https://hal.archives-ouvertes.fr/hal-01020705.

- [18] M. D. Shepherd, A. Selden, and J. Selden, University students’ reading of their first-year mathematics textbooks, Mathematical Thinking and Learning 14 (2010), no. 3, 226–256.

- [19] A. Weinberg, E. Wiesner, B. Benesh, and T. Boester, Undergraduate students’ self-reported use of mathematics textbooks, Problems, Resources, and Issues in Mathematics Undergraduate Studies 22 (2012), no. 2, 152–175, DOI 10.1080/10511970.2010.509336.

- [20] E. Wiesner, A. Weinberg, E. F. Fulmer, and J. Barr, The roles of textual features, background knowledge, and disciplinary expertise in reading a calculus textbook, Journal for Research in Mathematics Education 51 (2020), no. 2, 204–233.

Credits

Figures 1a–1d, Figure 4a, Figure 4c, Figure 5a, and Figure 6a are courtesy of David Farmer.

Figure 2a, Figure 2b, and Figure 3 are courtesy of Vilma Mesa.

Figure 4b, Figure 4d, Figure 5b, and Figure 6b are courtesy of Palash Kanwar.

Photo of Vilma Mesa is courtesy of the University of Michigan.